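This guide to data center rack cooling is a one-stop resource for thermal management, written to help you keep your IT infrastructure resilient, efficient, and scalable.

It runs from core principles to an in-depth comparison of air, liquid, modular, and immersion cooling, distilling a large body of literature into what you need to know to choose a data center rack cooling solution.

We focus on the key pain points: eliminating hot spots, lowering PUE by 0.1-0.5, cutting energy consumption by 20-40%, and avoiding downtime that can cost $100,000 per hour. We also cover the main optimization factors, including rack layout, hardware configuration, maintenance, and AI-driven automation.

Whether you are upgrading existing racks for higher density, building a new edge data center, or working toward net-zero emissions, this guide offers practical frameworks, real-world case studies, and forward-looking trends to help you turn rack cooling from a liability into a competitive advantage.

For data center managers, IT engineers, and facility operators, this is a practical guide to overcoming thermal challenges and maximizing the performance, lifespan, and sustainability of rack-mounted equipment.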

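1. Core Principles of Data Center Rack Cooling

Before looking at specific technologies, it is essential to understand the basic principles of effective rack cooling. They apply to every data center size and rack density, and they underpin any successful cooling strategy.

1.1 Heat Load

Heat load is the total heat produced by a rack's IT equipment plus environmental factors. It is the starting point for selecting and sizing a cooling solution.

IT equipment heat: accounts for 80-90% of a rack's total heat load. Calculate it by summing the rated power of all devices (for example, 10 servers × 750 W = 7.5 kW; 4 GPUs × 300 W = 1.2 kW; total IT load = 8.7 kW).

Environmental allowance: add 10-20% to the IT load to account for heat from sunlight, poor insulation, or adjacent heat-generating equipment.

Growth buffer: include a 10-15% buffer to accommodate future hardware upgrades or rack expansion.

Key insight: undersizing cooling relative to the heat load is a common mistake among data center operators. A 2024 survey by Data Center Dynamics found that 38% of facilities experienced hot spots because of inaccurate heat load calculations.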
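The sizing steps above can be sketched in a few lines of Python. This is a minimal illustration, not a sizing tool: the function name `design_heat_load_kw` and the default fractions (15% environmental allowance, 10% growth buffer) are assumptions picked from inside the ranges quoted above, and applying the two allowances multiplicatively is also an assumption, since the text does not say whether they compound.

```python
def design_heat_load_kw(device_watts, env_fraction=0.15, growth_fraction=0.10):
    """Return (it_load_kw, design_load_kw) for one rack.

    device_watts    -- iterable of rated device power in watts
    env_fraction    -- environmental allowance (the guide suggests 0.10-0.20)
    growth_fraction -- growth buffer (the guide suggests 0.10-0.15)
    """
    if env_fraction < 0 or growth_fraction < 0:
        raise ValueError("allowances must be non-negative")
    it_load_kw = sum(device_watts) / 1000.0
    # Assumption: the two allowances compound rather than add.
    design_load_kw = it_load_kw * (1 + env_fraction) * (1 + growth_fraction)
    return it_load_kw, design_load_kw


# Worked example from the text: 10 servers at 750 W plus 4 GPUs at 300 W.
devices = [750] * 10 + [300] * 4
it_kw, design_kw = design_heat_load_kw(devices)
print(f"IT load: {it_kw:.1f} kW, design load: {design_kw:.1f} kW")
```

With these assumed fractions the 8.7 kW IT load becomes a design load of roughly 11 kW, which is the figure a cooling unit would need to cover.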

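1.2 Key Metrics

To measure and optimize rack cooling, track these industry-standard metrics:

PUE (power usage effectiveness): total facility power ÷ IT load power. The ideal PUE is 1.0, and rack-level cooling efficiency directly affects how close a facility gets to it. For example, moving from air cooling to direct-to-chip liquid cooling can lower rack-related PUE by 0.2-0.3.

Rack inlet/outlet temperature: inlet temperature should stay between 18-24°C; outlet temperature typically falls between 35-45°C. A delta (outlet minus inlet) above 20°C indicates poor airflow or insufficient cooling.

Airflow rate: measured in CFM (cubic feet per minute). High-density racks need 1,500-2,500 CFM to stay at safe temperatures, while low-density racks need 500-1,000 CFM.

Humidity: 40-60% RH prevents corrosion and static discharge, both of which can damage rack-mounted equipment.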
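Two of the metrics above lend themselves to quick calculations. The sketch below computes PUE from the definition given in the text and estimates the airflow a rack needs using the standard sensible-heat approximation for air (CFM ≈ 3.16 × watts ÷ ΔT in °F). That approximation is not from this guide; it assumes air near sea-level density, and the function names are illustrative.

```python
def pue(total_facility_kw, it_load_kw):
    """Power usage effectiveness: total facility power / IT load power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


def required_airflow_cfm(heat_load_w, delta_t_c):
    """Estimate airflow (CFM) needed to carry away heat_load_w watts.

    Uses the sensible-heat rule of thumb CFM = 3.16 * W / dT(F), which
    assumes air at roughly sea-level density. delta_t_c is the rise from
    inlet to outlet in degrees Celsius.
    """
    if delta_t_c <= 0:
        raise ValueError("temperature rise must be positive")
    delta_t_f = delta_t_c * 1.8  # a temperature difference, so no +32 offset
    return 3.16 * heat_load_w / delta_t_f


print(f"PUE: {pue(1400.0, 1000.0):.2f}")
print(f"Airflow for 8.7 kW at a 15 C rise: {required_airflow_cfm(8700, 15):.0f} CFM")
```

Treat the airflow figure as a lower bound: bypass air, recirculation, and altitude all push the real requirement higher.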

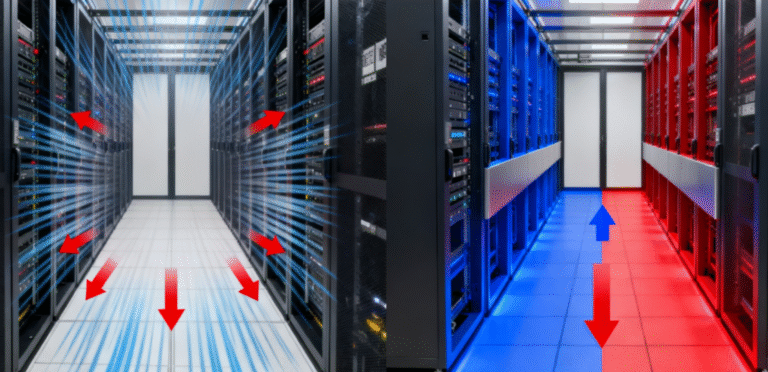

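1.3 Airflow Dynamics

Hot spots, localized pockets of heat inside a rack, are a silent killer of IT hardware. They form when cold supply air mixes with hot exhaust air and bypasses the server intakes. The fix is to optimize airflow:

Cold aisle/hot aisle configuration: arrange racks in rows so that server intakes face a "cold aisle" and exhausts face a "hot aisle". This reduces air mixing by 70%.

Containment systems: enclose the cold aisle or hot aisle with physical barriers (panels, doors, or ceilings) to isolate the airflow further. Fully contained aisles can lower PUE by 0.1-0.3 and eliminate hot spots in 95% of cases.

Blanking panels and cable management: empty rack slots and untidy cabling obstruct airflow. Installing blanking panels to seal gaps and using vertical cable managers to keep aisles clear improves airflow by 15-20%.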

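2. Data Center Rack Cooling Technologies

No single cooling technology suits every rack density and use case. The most effective options are described below by rack density, with pros, cons, and real-world applications.

2.1 Precision Air Cooling

Precision air conditioning is the most common rack cooling approach in enterprise data centers and small to mid-sized server rooms. It uses dedicated HVAC systems to deliver cooled air directly to the rack intakes.

Types of precision air cooling

- Direct expansion (DX) units: self-contained systems that cool air with refrigerant. They are installed above or beside the racks and suit small data centers with low to medium density.

- Specifications: capacity 10-50 kW per unit; temperature accuracy ±1°C; PUE range 1.3-1.6.

- Chilled-water air handlers: central systems that circulate chilled water through air handlers. Used in very large facilities with medium-density racks.

- Specifications: capacity 50-200 kW per unit; temperature accuracy ±0.5°C; PUE range 1.2-1.5.

Pros and cons

- Pros: low upfront cost, simple installation, minimal maintenance, and compatibility with most rack configurations.

- Cons: inefficient for high-density racks, prone to hot spots in dense setups, and higher energy use than liquid cooling.

Real-world application

A mid-sized financial services firm in Chicago deployed DX precision air cooling for 20 racks. With cold aisle containment, it achieved a PUE of 1.4 and zero cooling-related downtime over 3 years, and it saves $12,000 per year in energy costs compared with standard HVAC.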

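2.2 Liquid Cooling

Once rack density exceeds 15 kW, liquid cooling becomes the only viable solution. It removes heat 4 to 10 times more efficiently than air and allows precise temperature control even for racks of 50 kW and above.

Types of rack liquid cooling

- Direct-to-chip cooling: cold plates are mounted on CPUs, GPUs, and other high-heat components. A dielectric fluid or water-glycol mixture circulates through the plates, absorbs heat, and carries it to a heat exchanger.

- Specifications: capacity 20-50 kW per rack; fluid temperature 20-30°C; PUE range 1.1-1.3.

- Best for: AI/HPC racks and colocation facilities with mixed-density racks.

- Rack-level immersion cooling: servers are submerged in a non-conductive fluid inside a rack-sized tank. The fluid absorbs heat and then circulates to a built-in heat exchanger.

- Specifications: capacity 50-100 kW per rack; fluid temperature 30-45°C; PUE range 1.08-1.2.

- Best for: ultra-high-density racks, crypto mining, and dedicated HPC clusters.

Pros and cons

- Pros: eliminates hot spots, cuts fan energy by 70%, lowers PUE significantly, and scales with future density increases.

- Cons: higher upfront cost, plus the need for fluid management and specialized maintenance.

Real-world application

NVIDIA's AI research lab in California deployed direct-to-chip liquid cooling for 200 GPU racks. As a result, PUE fell from 1.5 to 1.2, fan energy dropped by 75%, and hardware failure rates fell by 40%.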

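2.3 Modular Cooling

Modular cooling systems consist of independent, rack-compatible units that run in parallel. AI-driven controls adjust the number of active units to match the real-time rack heat load, which makes this approach a good fit for data centers with fluctuating workloads or a growing rack count.

Key features

- Capacity: 10-40 kW per module; scalable from 2 to 20 modules per rack group.

- Redundancy: an N+1 design avoids downtime when a unit fails.

- Controls: integrate with DCIM tools to match cooling output to load, reducing energy waste by 30-40%.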
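To make the capacity and N+1 figures above concrete, here is a small sketch that works out how many modules a rack group would need. The function name and the hard limit of 20 modules per group are illustrative assumptions based on the ranges quoted above; a real controller would also account for module efficiency curves and ramp times.

```python
import math


def modules_required(group_load_kw, module_capacity_kw, redundancy=1, max_modules=20):
    """Return (active, installed) module counts for one rack group.

    active    -- modules needed to carry the load
    installed -- active modules plus `redundancy` spares (N+1 by default)
    """
    if group_load_kw < 0 or module_capacity_kw <= 0:
        raise ValueError("load must be >= 0 and module capacity > 0")
    active = max(1, math.ceil(group_load_kw / module_capacity_kw))
    installed = active + redundancy
    if installed > max_modules:
        raise ValueError("load exceeds what one rack group can host")
    return active, installed


# Example: a 95 kW rack group served by 40 kW modules.
active, installed = modules_required(95, 40)
print(f"{active} active modules, {installed} installed with N+1")
```

The same function can be re-run as load changes, which is the essence of matching active modules to demand.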

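Pros and cons

- Pros: pay-as-you-grow model, built-in redundancy, easy installation with no downtime, and compatibility with both air and liquid cooling.

- Cons: higher upfront cost than non-modular air cooling, and a need for integrated smart controls.

Real-world application

AWS's Ohio data center uses modular liquid cooling to serve more than 500 racks of varying density. The system scales between 2 and 6 modules per rack group, cutting energy costs by 35% and lowering PUE to 1.25.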

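2.4 Free Cooling

Free cooling uses cold outdoor air or water to reduce mechanical cooling run time, cutting energy use by 50-70% in temperate or cold climates. It is usually paired with precision air or liquid cooling as a supplementary system.

Types of free cooling

- Air-side economization: bypasses mechanical cooling and brings filtered outdoor air into the data center. Mixed-air controls keep rack inlet temperatures in the safe range.

- Best for: temperate climates where outdoor temperatures stay below 20°C for more than 6 months of the year.

- Water-side economization: uses cold outdoor water to cool the chilled-water loop, reducing chiller run time.

- Best for: hyperscale data centers with access to cold water sources.

Pros and cons

- Pros: sharply lower energy costs and carbon emissions, complements existing cooling systems, and low maintenance cost.

- Cons: climate dependent, and requires air or water filtration.

Real-world application

Google's data center in Finland runs on free cooling 11 months of the year. The system cut rack cooling energy by 65% and brought facility-wide PUE to 1.1, among the lowest in the industry.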

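2.5 Passive Cooling

Edge data centers usually have low-density racks and limited power, which makes passive cooling an ideal choice. Passive systems dissipate heat through heat sinks, natural convection, and insulated enclosures, with no fans or pumps.

Key features

- Capacity: ≤5 kW per rack; temperature range 18-30°C.

- Energy use: 0 kWh; PUE range 1.0-1.1.

- Maintenance: minimal.

Pros and cons

- Pros: zero cooling energy, low maintenance, and compact design.

- Cons: limited to low-density racks and less effective in hot climates.

Real-world application

A global grocery chain deployed passive cooling on 150 edge racks in rural areas of the United States. The solution cut edge cooling costs by 100% and delivered 99.99% uptime over two years.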

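3. Factors That Affect Data Center Rack Cooling Efficiency

3.1 Rack Layout and Placement

Avoid hot zones: do not place racks near heat sources. A 2023 study by the Uptime Institute found that racks near windows had twice as many hot spots.

Clearance requirements: keep 2-3 feet of clearance around cooling units and rack intakes and exhausts. Blocked vents can reduce airflow by 30-40%.

3.2 Hardware Configuration and Rack Density

How IT equipment is arranged inside the rack, and the overall density of components, directly affects cooling efficiency. A poorly configured rack blocks airflow and concentrates heat even when an advanced cooling system is in place.

Server orientation and vertical spacing: install servers with consistent vertical spacing so that cold air circulates evenly. Avoid "stacking" high-heat devices in the same vertical zone, which creates localized heat pockets. ASHRAE's 2023 thermal guidelines stress that at least 3U of vertical spacing between high-power servers can reduce hot spot formation by 60%.

Blanking panels and filler panels: empty rack slots are a major source of airflow leakage, because cold air escapes through the gaps instead of reaching the server intakes. An Uptime Institute survey found that 42% of data centers skip blanking panels, which lowers cooling efficiency by 15-20%. Fitting $20-$50 blanking panels to every empty slot is one of the highest-ROI cooling optimizations available.

High-density hardware mitigation: the concentrated heat from ultra-dense components can overwhelm standard cooling systems. For these setups, use a "thermal-aware" rack design: place high-heat devices at the bottom or middle of the rack and pair them with direct-to-chip cooling. Research by Schmidt et al. shows that thermal-aware racks can lower peak rack temperature by 4-6°C in 50 kW/rack setups.

PDU placement: PDUs generate 2-5% of a rack's total heat. Mount PDUs on the side of the rack so they do not block airflow, and choose high-efficiency PDUs to minimize heat output.

Case example: a colocation provider in Dallas reconfigured 100 mixed-density racks with thermal-aware spacing, blanking panels, and side-mounted PDUs. Without upgrading the cooling system, it reduced hot spots by 75% and raised overall rack cooling efficiency by 22%, which let it add 10% more servers per rack while staying within ASHRAE limits.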

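3.3 Cooling System Maintenance

Even the most advanced cooling system loses efficiency over time without proper maintenance. The Uptime Institute reports that 30% of data center cooling failures are caused by neglected maintenance, and that poorly maintained systems run at only 60-70% of their original efficiency.

Filter replacement: air filters in precision cooling units and containment systems trap dust, pollen, and debris. Clogged filters cut airflow by 30-40%, forcing the cooling system to work harder and raising energy use by 25-30%. Replace filters every 1-3 months and use high-efficiency filters to protect equipment and cooling coils.

Coil cleaning: evaporator and condenser coils in air cooling equipment collect dust and dirt, which reduces heat transfer. A 2024 ASHRAE study found that dirty coils increase cooling energy use by 18-22%. Clean coils quarterly with compressed air or a professional coil cleaning solution.

Refrigerant and fluid checks: in liquid cooling systems and DX air units, low refrigerant or contaminated fluid reduces cooling capacity. Check refrigerant levels and test the liquid coolant every 6 months to prevent corrosion or pump failure. A financial services firm in New York lost $50,000 to downtime caused by a slow refrigerant leak in a DX unit.

Redundancy testing: N+1 or 2N redundancy is worthless if the backup cooling unit fails when it is needed. Test redundant systems quarterly by simulating a primary unit failure, and confirm that the backup starts within 2-3 seconds. The Uptime Institute's 2023 Global Data Center Survey found that only 58% of facilities test redundant systems regularly, leaving 42% exposed to cooling-related failures.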
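The intervals above can be turned into a simple overdue check. The sketch below is only one way to encode them: the task names are made up for illustration, and the filter interval uses 30 days, the conservative end of the 1-3 month range in the text.

```python
from datetime import date, timedelta

# Intervals taken from the schedule above; task names are illustrative.
MAINTENANCE_INTERVAL_DAYS = {
    "filter_replacement": 30,            # every 1-3 months (conservative end)
    "coil_cleaning": 90,                 # quarterly
    "refrigerant_and_fluid_check": 180,  # every 6 months
    "redundancy_failover_test": 90,      # quarterly
}


def overdue_tasks(last_done, today):
    """Return a sorted list of tasks that are overdue or were never recorded.

    last_done -- dict mapping task name to the date it was last completed
    today     -- the date to evaluate against
    """
    overdue = []
    for task, interval in MAINTENANCE_INTERVAL_DAYS.items():
        last = last_done.get(task)
        if last is None or today - last > timedelta(days=interval):
            overdue.append(task)
    return sorted(overdue)


history = {
    "filter_replacement": date(2024, 5, 20),
    "coil_cleaning": date(2024, 1, 10),
    "refrigerant_and_fluid_check": date(2024, 3, 1),
}
print(overdue_tasks(history, date(2024, 6, 1)))
```

In practice a check like this would usually live in a DCIM or ticketing system rather than a standalone script.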

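3.4 Environmental Control Beyond Temperature

Temperature gets most of the attention, but humidity and air quality matter just as much for rack cooling efficiency and hardware lifespan.

Humidity regulation: as noted earlier, humidity outside the ASHRAE-recommended range can damage equipment, and it also affects cooling performance. High humidity forces the cooling system to spend capacity on dehumidification and can lower heat transfer efficiency by 10-15%. Low humidity increases static electricity, which can damage server components and attract dust that disrupts airflow. Modern cooling systems with two-stage humidification/dehumidification can hold relative humidity in the optimal range, but calibration is critical because sensor drift leads to incorrect humidity levels. Calibrate sensors quarterly with a NIST-traceable hygrometer.

Air quality and filtration: dust, lint, and airborne particles clog server vents and cooling coils, reducing airflow and heat dissipation. Invest in MERV 13+ air filters for the data center and in rack-level pre-filters for high-density setups. A study by the Data Center Institute found that improved air filtration can cut server maintenance costs by 28% and extend cooling system life by 3-5 years. For data centers in industrial or dusty areas, consider electrostatic precipitators to remove fine particles.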

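3.5 Monitoring, Automation, and AI Integration

The era of "set it and forget it" cooling is over. Modern data centers rely on real-time monitoring and automation to optimize rack cooling, especially as densities and workloads become more dynamic.

Rack-level monitoring: facility-wide temperature sensors are not enough. Install sensors at rack inlets, outlets, and hot zones to track local conditions. Use DCIM tools to aggregate the data and set alerts for temperature spikes or humidity deviations. A 2023 Gartner survey found that data centers with rack-level monitoring have 40% fewer cooling-related failures.

AI-driven predictive cooling: advanced DCIM platforms integrate machine learning algorithms that predict heat load from workload patterns and weather conditions. The AI adjusts cooling output proactively, for example by ramping up cooling ahead of a scheduled AI training job that will raise the rack load. Microsoft's Azure data centers use AI-driven cooling to cut energy use by 25%, and Google's DeepMind AI cut cooling costs by 40% by optimizing airflow and temperature setpoints.

Dynamic cooling adjustment: automation lets the cooling system adapt to real-time changes. For example, modular cooling units can activate or deactivate according to rack load, and variable-speed fans can adjust airflow to server demand. Compared with static cooling settings, this reduces energy waste by 30-40%.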
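A rack-level alert rule of the kind described above can be sketched as follows. The thresholds come from the metrics given earlier in this guide (18-24°C inlet, outlet-inlet delta above 20°C, 40-60% RH). The `RackReading` type and `rack_alerts` function are hypothetical names for illustration and do not correspond to any particular DCIM product's API.

```python
from dataclasses import dataclass


@dataclass
class RackReading:
    """One sample from a rack's sensors (hypothetical structure)."""
    rack_id: str
    inlet_c: float
    outlet_c: float
    humidity_pct: float


def rack_alerts(reading):
    """Return a list of alert strings for a single rack reading."""
    alerts = []
    if not 18.0 <= reading.inlet_c <= 24.0:
        alerts.append("inlet temperature outside 18-24 C")
    if reading.outlet_c - reading.inlet_c > 20.0:
        alerts.append("inlet/outlet delta above 20 C")
    if not 40.0 <= reading.humidity_pct <= 60.0:
        alerts.append("relative humidity outside 40-60%")
    return alerts


healthy = RackReading("A1", inlet_c=22.0, outlet_c=38.0, humidity_pct=50.0)
stressed = RackReading("B7", inlet_c=26.0, outlet_c=48.0, humidity_pct=35.0)
for r in (healthy, stressed):
    print(r.rack_id, rack_alerts(r) or "ok")
```

Fixed thresholds like these are the simplest baseline; predictive systems would replace them with learned limits per rack.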

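3.6 Power and Cooling Synergy

Cooling and power infrastructure are inherently linked, and ignoring the interaction between them leads to inefficiency and higher costs.

UPS heat load management: uninterruptible power supplies generate 5-10% of a data center's total heat load. Place UPS equipment in a separate cooling zone so that its heat does not add to the rack environment. Choosing a high-efficiency UPS system minimizes heat output, and that alone can lower facility-wide PUE by 0.05-0.1.

Dynamic power management (DPM) integration: DPM tools adjust server power draw according to workload. Paired with cooling automation, DPM can reduce power and cooling costs by 15-20%. For example, during off-peak hours DPM puts idle servers into a low-power mode, which lowers the heat load and lets the cooling system scale back.

Coordinating renewable energy and cooling: for data centers that use solar or wind power, align cooling run time with renewable generation. For example, use free cooling when solar output is low, and rely on mechanical cooling supplemented by solar power during peak sunlight. This strategy helped Google's Oklahoma data center reach a PUE of 1.12 while running on 80% renewable energy.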

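4. Overcoming High-Density Rack Cooling Challenges

High-density racks bring their own cooling challenges: concentrated heat loads, limited airflow, and very tight precision requirements. The strategies below have proven effective and are illustrated with industry case studies.

4.1 Limits of Air Cooling for High-Density Racks

Above 15 kW per rack, air cooling stops working because air has a low heat capacity and cannot carry concentrated heat away quickly enough. A 2022 study by Data Center Dynamics found that air-cooled high-density racks develop hot spots 3 times as often as liquid-cooled racks and use 40-60% more cooling energy.

Key insight: for racks above 15 kW, liquid cooling is not just the "better" option, it is a necessity. Direct-to-chip cooling handles 20-50 kW per rack, and immersion cooling reaches 100 kW per rack and beyond.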
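The density thresholds used throughout this guide can be condensed into a small lookup. The sketch below is a rough first-pass filter, not a design rule: the function name is illustrative, and assigning the 15-20 kW band to direct-to-chip cooling is an assumption, since the guide says liquid cooling is required above 15 kW but quotes direct-to-chip capacity from 20 kW.

```python
def suggest_cooling(rack_kw):
    """Map rack power density (kW per rack) to a cooling approach."""
    if rack_kw <= 0:
        raise ValueError("rack power must be positive")
    if rack_kw <= 5:
        # Passive cooling is quoted as viable up to 5 kW per rack.
        return "passive or precision air cooling"
    if rack_kw <= 15:
        return "precision air cooling with containment"
    if rack_kw <= 50:
        # Assumption: 15-20 kW is treated as direct-to-chip territory.
        return "direct-to-chip liquid cooling"
    return "immersion cooling"


for kw in (3, 10, 30, 80):
    print(f"{kw} kW/rack -> {suggest_cooling(kw)}")
```

Climate, budget, and retrofit constraints would all refine the answer, so the output is a starting point for evaluation only.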

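4.2 Strategies for Ultra-High-Density Racks

Ultra-high-density racks need specialized cooling solutions and design considerations:

Two-phase immersion cooling: servers are submerged in a dielectric liquid that absorbs heat as it evaporates. The vapor condenses back into liquid on a cooling coil, forming a closed loop with near-ideal heat transfer. Two-phase immersion cooling reaches PUE values as low as 1.05 and fully eliminates hot spots even in 100 kW/rack setups.

Case example: a cryptocurrency mining facility in Texas deployed two-phase immersion cooling for 50 racks. Compared with air cooling, the system cut cooling energy by 55%, and stable thermal conditions extended server life by 30%.

Rack-level liquid cooling loops: for HPC clusters, rack-integrated liquid cooling loops deliver coolant directly to the cold plates of each server. These loops are scalable, redundant, and compatible with standard server racks, which makes them well suited to retrofitting existing high-density setups.

Thermal storage integration: for racks with variable heat loads, a thermal storage system absorbs excess heat during peaks and reduces the load on the cooling system. An HPC lab at Stanford University uses phase-change materials to handle 30 kW peaks in its 40 kW/rack setup, avoiding the need to upgrade cooling capacity.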

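4.3 Retrofitting Existing Racks for Higher Density

Many data centers need to raise rack density without rebuilding their cooling infrastructure. Here is how to do it cost-effectively:

Add direct-to-chip cooling to air-cooled racks: retrofit cold plate kits can be installed on existing servers to handle the added heat load. This lets a rack go from 10 kW to 25 kW without replacing the main air cooling system.

Upgrade containment: replace partial containment with fully sealed cold aisles and variable air volume dampers. This improves airflow efficiency by 25-30%, allowing higher density on the existing cooling plant.

Implement zone cooling: add small rack-mounted cooling units aimed at hot spot areas in dense racks. These supplementary systems cost $5k-$10k per unit and extend the life of the existing cooling infrastructure.

Case example: a healthcare data center in Florida retrofitted 30 air-cooled racks with direct-to-chip cold plates and fully enclosed cold aisles. It raised density to 22 kW per rack without upgrading the chilled-water system and saved $200k in cooling infrastructure costs.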

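5. Trends in Data Center Rack Cooling

The future of data center rack cooling is driven by three forces: rising rack density, global sustainability requirements, and advances in technology. The most influential trends to watch are described below.

5.1 AI-Driven Autonomous Cooling

AI will move from predictive adjustment to fully autonomous cooling systems that optimize themselves in real time. These systems will combine data from servers, cooling units, weather forecasts, and the energy grid to make decisions that balance efficiency, performance, and cost. For example, an autonomous system could shift cooling onto renewable energy during generation peaks, adjust rack temperatures based on hardware health data, and diagnose cooling problems before they affect operations. Gartner predicts that by 2026, 60% of hyperscale data centers will use autonomous cooling systems and reduce cooling energy by 30%.

5.2 Sustainable and Zero-Carbon Cooling

As net-zero targets approach, data centers are moving to carbon-neutral cooling solutions:

Zero-water cooling: dry coolers and air-cooled chillers are replacing water-intensive cooling towers to address water scarcity. Zero-water liquid cooling systems from companies such as Coolcentric use air-cooled heat exchangers and need no water at all.

Passive cooling for medium-density racks: advances in heat sink design and phase-change materials are extending passive cooling to 10-15 kW per rack. This will let edge data centers and small facilities reach a PUE below 1.2 without mechanical cooling.

Renewable-powered liquid cooling: solar- or wind-powered pumps and heat exchangers are being integrated into liquid cooling systems to create fully renewable cooling loops.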

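5.3 Next-Generation Immersion Cooling

As fluid technology improves and costs fall, immersion cooling will become mainstream:

Eco-friendly dielectric fluids: bio-based, non-toxic, and recyclable fluids are replacing petroleum-based fluids and reducing environmental impact.

Open-rack immersion systems: new designs allow access to servers without draining the fluid, which makes maintenance easier and reduces downtime.

Edge rack immersion cooling: compact, rack-sized immersion tanks are being developed for edge data centers to enable high-density computing in remote locations with limited power.

5.4 Heat Recovery

Waste heat from rack cooling will be reused for other purposes, turning data centers into "heat hubs". For example, heat from liquid cooling loops can warm office buildings, greenhouses, or municipal water supplies. A data center in Stockholm already captures 80% of its waste heat, enough to heat 10,000 homes, and the practice is expected to spread to 40% of European data centers by 2030.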

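6. Conclusion

Data center rack cooling is no longer a supporting function. It is a strategic asset that affects performance, cost, sustainability, and reliability. As rack densities keep rising and global energy supply tightens, success depends on the following:

- Start with the basics: calculate an accurate heat load, optimize rack layout and airflow, and invest in proper containment.

- Match the cooling technology to the density: air cooling for low to medium density, direct-to-chip liquid cooling for high density, and immersion cooling for ultra-high density.

- Prioritize maintenance and monitoring: regular maintenance and real-time monitoring prevent efficiency loss and downtime.

- Embrace innovation: adopt AI-driven automation, sustainable cooling solutions, and forward-looking technologies such as immersion cooling.

By following this framework, data center operators can build rack cooling systems that meet current needs and handle future challenges, whether those are 100 kW AI racks, net-zero carbon targets, or distributed edge computing.

The most successful data centers will not only cool their racks. They will use rack cooling as a competitive advantage to lower costs, improve reliability, and lead the development of sustainable digital infrastructure.

For a custom rack cooling assessment based on your facility's density, budget, and sustainability goals, contact a certified data center cooling specialist to make sure your strategy is future-ready.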

References: