This article draws on data, technical analysis, and case studies from practical deployments to answer a key question for IT leaders and data center operators: why are data center liquid cooling solutions superior to air cooling?

In an era of rapidly growing AI workloads, HPC, and power density, data center cooling has evolved from an “auxiliary function” to a key driver of improved efficiency, reliability, and cost reduction. Air cooling, a traditional standard used for decades, is now approaching its performance limits, while liquid cooling solutions for data centers are increasingly becoming the preferred choice for forward-thinking enterprises.

How Efficient Is The Heat Transfer Of Liquid Cooling Solutions?

Heat transfer efficiency is the foundation of effective cooling—and liquid cooling dominates here.

Liquids (such as dielectric coolants or water-glycol mixtures) have inherent thermal properties that air simply can’t match:

Water-based coolants conduct heat roughly 23–25 times better than air. For example, water has a thermal conductivity of ~0.6 W/(m·K) at 25°C, while air is only ~0.026 W/(m·K).

For the same temperature rise, water can absorb 3,000+ times more heat per unit volume than air. This means liquid cooling solutions can remove heat from critical components (CPUs, GPUs, ASICs) directly and at scale.
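The two ratios above can be checked with a few lines of arithmetic. This is a rough sketch: the conductivities are the figures quoted above, while the density and specific-heat values are assumed textbook approximations for water and air near 25°C, not figures from a specific coolant datasheet.

```python
# Approximate fluid properties near 25 °C at atmospheric pressure.
# k values come from the text; rho and cp are assumed textbook values.
WATER = {"k": 0.6, "rho": 997.0, "cp": 4180.0}   # W/(m·K), kg/m³, J/(kg·K)
AIR = {"k": 0.026, "rho": 1.18, "cp": 1005.0}


def conductivity_ratio(liquid, gas):
    """How many times better the liquid conducts heat than the gas."""
    return liquid["k"] / gas["k"]


def volumetric_heat_capacity(fluid):
    """Heat absorbed per cubic metre per kelvin of temperature rise, J/(m³·K)."""
    return fluid["rho"] * fluid["cp"]


def volumetric_ratio(liquid, gas):
    """Ratio of heat absorbed per unit volume for the same temperature rise."""
    return volumetric_heat_capacity(liquid) / volumetric_heat_capacity(gas)


if __name__ == "__main__":
    print(f"conductivity ratio: {conductivity_ratio(WATER, AIR):.0f}x")
    print(f"volumetric heat capacity ratio: {volumetric_ratio(WATER, AIR):.0f}x")
```

These ratios apply to water. Dielectric coolants generally have lower conductivity and heat capacity than water, so the advantage over air is smaller for those fluids.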

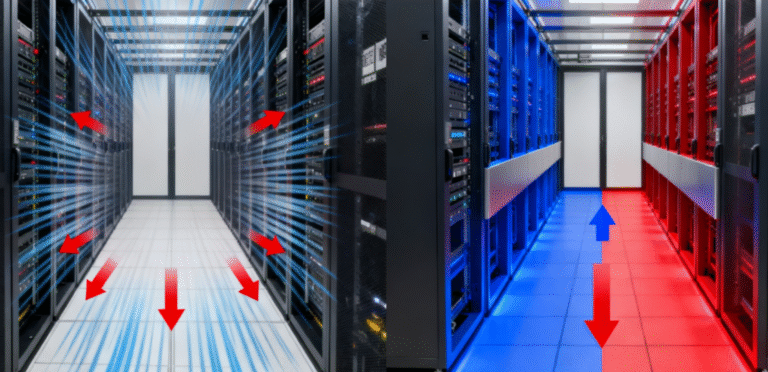

Air cooling, by contrast, relies on forced convection to move heat from components to the surrounding air—a process hindered by multiple thermal resistances (e.g., from the chip to the heatsink, heatsink to air, and air to the HVAC system). These resistances create “hotspots” (common in dense GPU racks) and limit cooling capacity.

Air cooling maxes out at ~10–15 kW per rack for most data centers. Data center liquid cooling solutions—especially immersive or cold-plate designs—easily handle 50–100 kW per rack, with some HPC facilities pushing 200+ kW. This makes liquid cooling indispensable for AI/ML workloads, where a single GPU can consume 400+ watts.
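To see why rack density is limited by the coolant, it helps to estimate the flow needed to carry a given heat load using the steady-state relation Q = ṁ · cp · ΔT. The sketch below is illustrative only: the 50 kW load comes from the range above, while the 10 K coolant temperature rise and the fluid properties are assumptions.

```python
# Assumed textbook properties near 25 °C: density in kg/m³, specific heat in J/(kg·K).
WATER_RHO, WATER_CP = 997.0, 4180.0
AIR_RHO, AIR_CP = 1.18, 1005.0


def mass_flow_kg_s(heat_w, cp, delta_t_k):
    """Mass flow required to absorb heat_w with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (cp * delta_t_k)


def volume_flow_m3_s(heat_w, rho, cp, delta_t_k):
    """Volumetric flow corresponding to the required mass flow."""
    return mass_flow_kg_s(heat_w, cp, delta_t_k) / rho


if __name__ == "__main__":
    rack_heat_w = 50_000   # 50 kW rack
    delta_t_k = 10.0       # assumed coolant temperature rise
    water = volume_flow_m3_s(rack_heat_w, WATER_RHO, WATER_CP, delta_t_k)
    air = volume_flow_m3_s(rack_heat_w, AIR_RHO, AIR_CP, delta_t_k)
    print(f"water: {water * 1000:.2f} L/s")
    print(f"air:   {air:.2f} m³/s")
```

Under these assumptions a 50 kW rack needs on the order of a litre of water per second but several cubic metres of air per second, which is the practical reason airflow becomes unmanageable at high density.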

Can Liquid Cooling Solutions Deliver Lower PUE Than Air Cooling?

Yes, by a significant margin. PUE (Power Usage Effectiveness, the ratio of total facility energy to IT equipment energy) is the gold standard for data center efficiency, and liquid cooling drives PUE to levels air cooling can’t reach.

Typical air-cooled data centers have a PUE of 1.5–1.8, meaning that non-IT overhead such as cooling adds 50–80% on top of the IT load (roughly 33–44% of total facility energy). Even “efficient” air-cooled facilities rarely dip below 1.4.

Modern data center liquid cooling solutions achieve PUEs of 1.05–1.15. Immersive liquid cooling, which eliminates server fans and reduces HVAC reliance, can hit 1.02–1.08 in optimal conditions.
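Because PUE is total facility energy divided by IT energy, the overhead can be expressed either relative to the IT load or as a share of total energy, and the two are easy to confuse. A minimal sketch of both conversions follows; the function names are illustrative.

```python
def pue(total_facility_kwh, it_kwh):
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("it_kwh must be positive")
    return total_facility_kwh / it_kwh


def overhead_vs_it(pue_value):
    """Non-IT energy as a fraction of IT energy (PUE 1.5 -> 0.5)."""
    return pue_value - 1.0


def overhead_share_of_total(pue_value):
    """Non-IT energy as a fraction of total facility energy (PUE 1.5 -> 1/3)."""
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    for value in (1.8, 1.5, 1.15, 1.05):
        print(
            f"PUE {value}: overhead = {overhead_vs_it(value):.0%} of IT load, "
            f"{overhead_share_of_total(value):.0%} of total energy"
        )
```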

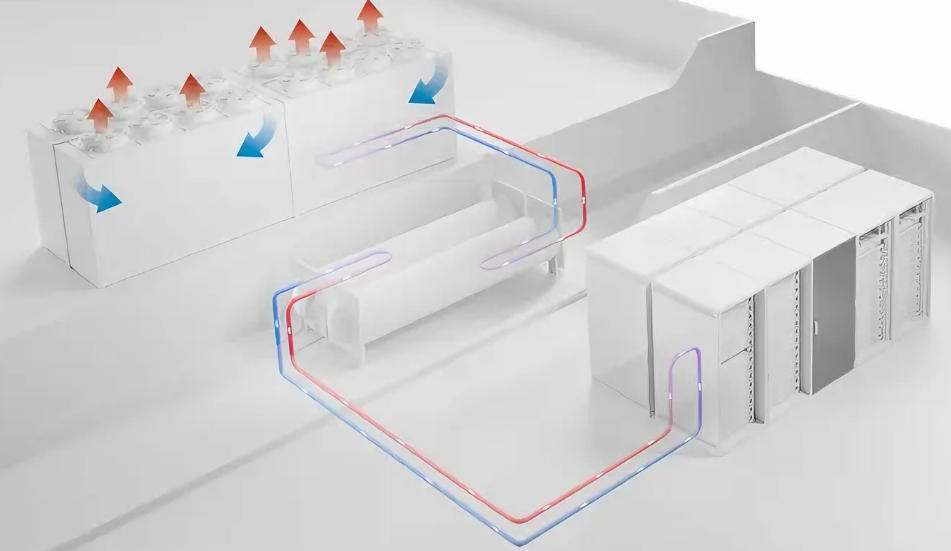

Liquid cooling removes heat directly from components, cutting out energy-wasting middle steps (e.g., server fans, which consume 10–25% of IT power, and large CRAC/CRAH units).

Many liquid cooling systems use “free cooling” year-round: cold outdoor air or water (without mechanical refrigeration) cools the circulating liquid, further slashing energy use.

A hyperscaler’s AI data center using immersive liquid cooling for 500 GPU servers (50 kW/rack) achieved a PUE of 1.08—saving 400,000+ kWh annually compared to an air-cooled equivalent (PUE 1.6).
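The savings in a comparison like this follow directly from the PUE difference: annual savings = IT load × (PUE before − PUE after) × hours per year. The sketch below uses the two PUE values from the case above. The case does not state the IT load, so the 100 kW figure is purely a hypothetical input, and constant load over the year is assumed.

```python
HOURS_PER_YEAR = 8760


def annual_facility_kwh(it_load_kw, pue_value):
    """Total facility energy per year for a constant IT load."""
    return it_load_kw * pue_value * HOURS_PER_YEAR


def annual_savings_kwh(it_load_kw, pue_before, pue_after):
    """Energy saved per year by moving from pue_before to pue_after."""
    return (
        annual_facility_kwh(it_load_kw, pue_before)
        - annual_facility_kwh(it_load_kw, pue_after)
    )


if __name__ == "__main__":
    it_load_kw = 100.0  # hypothetical IT load, not taken from the case study
    saved = annual_savings_kwh(it_load_kw, pue_before=1.6, pue_after=1.08)
    print(f"estimated savings: {saved:,.0f} kWh/year")
```

Savings scale linearly with IT load, so a larger deployment at the same PUE values would save proportionally more.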

How Do Liquid Cooling Solutions Support Higher Power Density?

The rise of AI and HPC has pushed rack power densities from 5–10 kW (traditional) to 30–100+ kW (modern). Air cooling can’t keep up—liquid cooling is the only viable solution for dense deployments.

At densities above 15 kW/rack, air cooling requires impractically large fans, wider hot aisles, and oversized HVAC systems. Hotspots become unavoidable, leading to component throttling or failure.

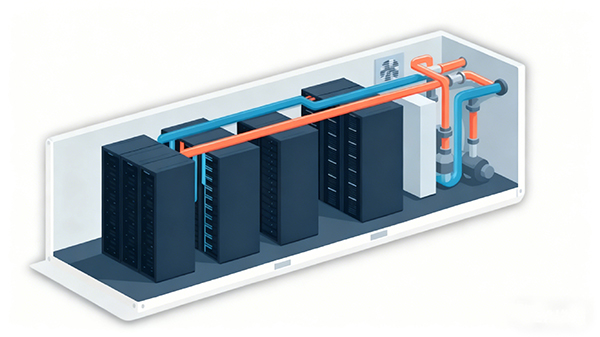

Liquid cooling offers two main approaches:

Cold-plate (direct-to-chip) cooling attaches directly to CPUs/GPUs, cooling individual components at 30–50 kW/rack, which makes it ideal for retrofitting existing servers.

Immersion cooling submerges entire servers in dielectric coolant, supporting 50–100+ kW/rack with no hotspots.

Liquid cooling eliminates the need for large air ducts, fan trays, and wide aisles. Data centers can reduce floor space by 30–50% while hosting 2–3x more computing power.

Organizations can scale compute capacity without expanding data center footprint—critical for urban locations where real estate is costly.
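The footprint effect of higher density can be approximated by counting racks for a fixed IT load. This is a deliberately simple sketch: the 1,000 kW load is hypothetical, and the count ignores CDUs, piping, and service clearances, which is one reason realized floor-space savings are smaller than the rack-count ratio alone suggests.

```python
import math


def racks_needed(it_load_kw, kw_per_rack):
    """Number of racks required to host it_load_kw at a given rack density."""
    if kw_per_rack <= 0:
        raise ValueError("kw_per_rack must be positive")
    return math.ceil(it_load_kw / kw_per_rack)


if __name__ == "__main__":
    it_load_kw = 1000  # hypothetical 1 MW IT load
    for density in (10, 30, 50, 100):
        print(f"{density:>3} kW/rack -> {racks_needed(it_load_kw, density)} racks")
```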

Are Liquid Cooling Solutions More Reliable Than Air Cooling?

Yes—liquid cooling reduces downtime risks and extends hardware lifespan.

Liquid cooling maintains component temperatures within ±1°C, compared to ±5–8°C for air cooling. Consistent temperatures minimize thermal stress on chips, capacitors, and other sensitive parts, reducing failure rates by 30–50%.

Air cooling relies on thousands of server fans and HVAC blowers—all prone to wear, dust buildup, and failure. Liquid cooling systems have far fewer moving parts (e.g., pumps, valves), cutting annual failure rates from 3–5% (air) to 0.5% or lower (liquid).

Immersive and closed-loop liquid cooling solutions are sealed, preventing dust, humidity, or corrosive particles from reaching components. This is especially valuable in industrial or coastal data centers, where air quality is poor.

Reduced hardware failures and longer server lifespans (extended by 15–20% with liquid cooling) lower replacement costs and unplanned downtime—saving organizations $100,000+ annually for mid-sized data centers.

Do Liquid Cooling Solutions Support Sustainability Goals?

Absolutely—liquid cooling is a cornerstone of “green data centers” and carbon reduction strategies.

By cutting PUE and reducing energy use, liquid cooling slashes Scope 2 emissions (from grid electricity). A 1 MW data center with liquid cooling (PUE 1.1) emits 30–40% less CO₂ than an air-cooled equivalent (PUE 1.6).
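For the same IT load and the same grid, emissions scale with total facility energy, so the relative reduction depends only on the two PUE values. The sketch below reads "1 MW" as the IT load, which is an assumption, and uses a hypothetical grid emission factor of 0.4 kg CO₂/kWh for the absolute figures.

```python
HOURS_PER_YEAR = 8760


def annual_co2_tonnes(it_load_kw, pue_value, grid_kg_per_kwh):
    """Scope 2 emissions per year for a constant IT load, in tonnes of CO2."""
    return it_load_kw * pue_value * HOURS_PER_YEAR * grid_kg_per_kwh / 1000.0


def relative_reduction(pue_air, pue_liquid):
    """Fractional emissions reduction at equal IT load and grid mix."""
    return 1.0 - pue_liquid / pue_air


if __name__ == "__main__":
    grid = 0.4  # hypothetical emission factor, kg CO2 per kWh
    air = annual_co2_tonnes(1000, 1.6, grid)
    liquid = annual_co2_tonnes(1000, 1.1, grid)
    print(f"air-cooled:    {air:,.0f} t CO2/year")
    print(f"liquid-cooled: {liquid:,.0f} t CO2/year")
    print(f"reduction:     {relative_reduction(1.6, 1.1):.1%}")
```

The PUE values quoted above give a reduction of about 31%, at the lower end of the 30–40% range. The absolute tonnage depends on the local grid factor.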

Contrary to myth, many liquid cooling solutions use less water than air cooling. Air-cooled data centers rely on evaporative coolers (which consume 20–40 gallons of water per kW annually), while closed-loop liquid cooling systems use minimal water (or none at all for dielectric coolant-based designs). Cold-plate liquid cooling can reduce water usage by 45% vs. air cooling.

Liquid cooling captures heat at 40–50°C (vs. 25–30°C for air cooling), making it usable for district heating, office warming, or industrial processes. This “recycled” heat can offset 30%+ of a building’s heating energy needs, so the data center’s waste heat becomes a usable energy source rather than a pure loss.

What Operational Benefits Do Liquid Cooling Solutions Offer?

Beyond efficiency and reliability, liquid cooling improves day-to-day operations and workplace conditions:

Server fans are reduced or eliminated. Liquid-cooled data centers operate at 40–50 dB (similar to an office) vs. 65–75 dB (air-cooled, equivalent to a vacuum cleaner). This improves working conditions and allows data centers to be built closer to urban areas.

Liquid cooling systems require less frequent checks than air cooling (e.g., no fan cleaning, filter replacements, or duct inspections). Many modern solutions include remote monitoring for coolant levels, pump performance, and temperature—reducing on-site maintenance time by 20–30%.

As power densities continue to rise (expected to hit 200 kW/rack by 2030), air cooling will become obsolete. Investing in data center liquid cooling solutions today avoids costly retrofits later and ensures compatibility with next-gen hardware (e.g., quantum computing, advanced AI accelerators).

What Role Does a CDU Play in Liquid Cooling Solutions?

A CDU (Coolant Distribution Unit) is the “central nervous system” of a liquid cooling system and a key reason such systems outperform air cooling in scalability, precision, and reliability. Unlike air cooling, which lacks a comparable centralized control component, data center liquid cooling solutions depend on CDUs to manage the entire coolant loop, making them indispensable for high-density deployments.

Core Functions of a CDU:

CDUs house pumps (often driven by variable-frequency drives, VFDs) that circulate coolant (water-glycol or dielectric fluid) between the IT equipment (servers, GPUs) and the external cooling source (e.g., chillers, dry coolers, or free cooling systems). They maintain consistent pressure (typically 2–4 bar) to ensure uniform cooling across all racks, avoiding the flow imbalance that causes hotspots in air-cooled setups.

CDUs integrate heat exchangers (e.g., plate-and-frame or shell-and-tube) to adjust coolant temperature before it reaches IT components. Advanced CDUs achieve ±0.5°C temperature accuracy—far tighter than air cooling’s ±5–8°C. For example, a CDU can supply coolant at 22–24°C to cold plates, ensuring GPUs run at optimal operating temperatures (35–45°C) even under 100% load.

CDUs include high-efficiency filters to remove debris, corrosion particles, or air bubbles from the coolant loop. This prevents clogs in cold plates or server cooling channels, a common failure point in poorly managed liquid systems, and extends the lifespan of pumps and valves.

Modern CDUs include real-time sensors for temperature, pressure, flow rate, and coolant level, paired with remote management software (e.g., BMS integration). They also incorporate safety mechanisms: leak detection (via moisture sensors), automatic shutoff valves, and bypass loops to prevent downtime if a component fails.
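The monitoring and safety logic described above can be pictured as a threshold check on each sensor reading. The following is a hypothetical sketch, not any vendor's API: the class and field names are invented for illustration, the temperature and pressure windows are the figures quoted earlier in this section, and the flow and coolant-level limits are assumed values.

```python
from dataclasses import dataclass


@dataclass
class CduReading:
    supply_temp_c: float
    pressure_bar: float
    flow_l_min: float
    coolant_level_pct: float
    leak_detected: bool


@dataclass(frozen=True)
class CduLimits:
    supply_temp_c: tuple = (22.0, 24.0)   # supply window quoted in the text
    pressure_bar: tuple = (2.0, 4.0)      # pressure range quoted in the text
    min_flow_l_min: float = 50.0          # hypothetical limit
    min_coolant_level_pct: float = 80.0   # hypothetical limit


def check_reading(reading, limits=CduLimits()):
    """Return a list of alarm messages; an empty list means all values are in range."""
    alarms = []
    if reading.leak_detected:
        alarms.append("LEAK: close shutoff valves and open bypass loop")
    low, high = limits.supply_temp_c
    if not low <= reading.supply_temp_c <= high:
        alarms.append(f"TEMP: supply {reading.supply_temp_c} C outside {low}-{high} C")
    low, high = limits.pressure_bar
    if not low <= reading.pressure_bar <= high:
        alarms.append(f"PRESSURE: {reading.pressure_bar} bar outside {low}-{high} bar")
    if reading.flow_l_min < limits.min_flow_l_min:
        alarms.append(f"FLOW: {reading.flow_l_min} L/min below minimum")
    if reading.coolant_level_pct < limits.min_coolant_level_pct:
        alarms.append(f"LEVEL: coolant at {reading.coolant_level_pct}%")
    return alarms


if __name__ == "__main__":
    print(check_reading(CduReading(23.0, 3.0, 60.0, 95.0, False)))
    print(check_reading(CduReading(26.0, 1.5, 60.0, 95.0, True)))
```

In a real deployment these alarms would typically be forwarded to the BMS rather than printed, and the limits would come from the equipment vendor's specifications.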

CDUs are modular, allowing data centers to add capacity as rack densities increase (e.g., from 50 kW/rack to 100 kW/rack). VFD pumps adjust speed based on cooling demand, reducing energy consumption by 15–25% compared to fixed-speed pumps.
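The energy benefit of variable-speed pumping can be estimated with the pump affinity laws, under which shaft power scales with the cube of pump speed. This is an idealized sketch for centrifugal pumps: it ignores static head and motor and drive losses, so real savings will differ.

```python
def power_fraction(speed_fraction):
    """Ideal pump power relative to full speed (affinity law: P ~ N^3)."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return speed_fraction ** 3


def energy_saving(speed_fraction):
    """Ideal fractional energy saving versus running at full speed."""
    return 1.0 - power_fraction(speed_fraction)


def speed_for_saving(saving):
    """Speed fraction at which the ideal saving equals the given value."""
    if not 0.0 <= saving <= 1.0:
        raise ValueError("saving must be between 0 and 1")
    return (1.0 - saving) ** (1.0 / 3.0)


if __name__ == "__main__":
    for speed in (1.0, 0.95, 0.9, 0.8):
        print(f"{speed:.0%} speed -> {energy_saving(speed):.1%} ideal saving")
    for saving in (0.15, 0.25):
        print(f"{saving:.0%} saving <- {speed_for_saving(saving):.1%} speed")
```

Under this idealization, the 15–25% savings quoted above correspond to running at roughly 91–95% of full speed, which shows how even modest speed reductions pay off.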

Why Data Center Liquid Cooling Solutions Are the Future

Air cooling technology has served the data center industry for decades, but it can no longer meet the demands of artificial intelligence, high-performance computing, and high-density computing. Liquid cooling solutions for data centers offer superior heat transfer, lower PUE values, higher power density support, greater reliability, and enhanced sustainability—all contributing to reduced long-term costs.

For IT leaders and data center operators, the question is no longer “Should we adopt liquid cooling?”, but “When?” The answer is: the sooner the better. With increasing workloads and rising energy costs, liquid cooling is more than just an upgrade—it’s a strategic necessity for maintaining competitiveness, efficiency, and sustainability.

If you’re considering transitioning to liquid cooling, start with a pilot project, such as a cold plate cooling retrofit of a GPU rack, to assess ROI and gain practical experience. The benefits—lower energy costs, less downtime, and a smaller carbon footprint—will be self-evident.