Liquid Cooling Data Center

Delivered Anywhere.

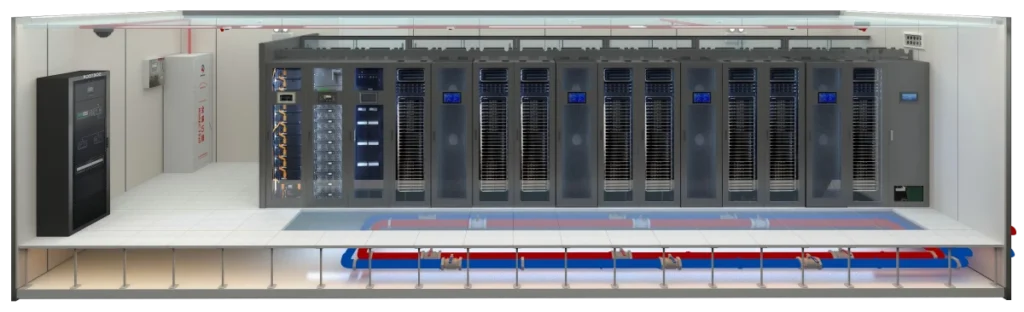

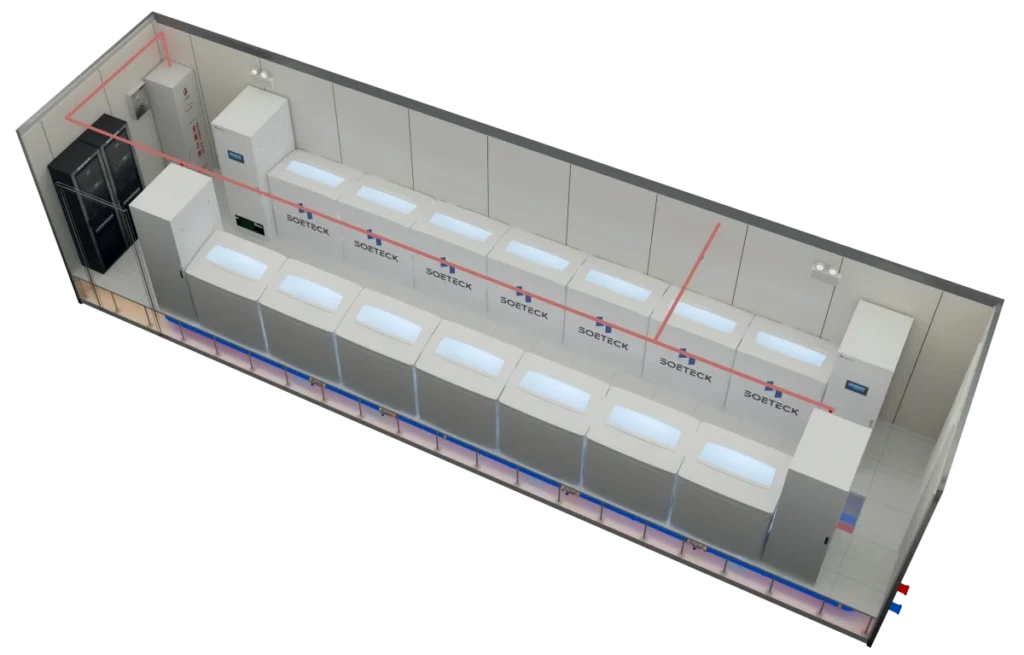

Liquid cooling is becoming the next-generation thermal management solution for data centers in the AI era. Soeteck AICoolit™ integrates direct-to-chip or immersion cooling into ISO-standard containers, enabling densities of 100 kW per rack. Skip lengthy construction cycles and get your compute cluster operational in weeks.
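To put the 100 kW-per-rack figure in perspective, a rough sizing sketch helps. The coolant flow a loop must carry follows from the heat balance Q = ṁ · c_p · ΔT. The Python below is a minimal sketch that assumes plain water (c_p ≈ 4186 J/(kg·K), density ≈ 1000 kg/m³) and a 10 K supply-to-return temperature rise. These are illustrative assumptions, not AICoolit specifications; many loops use glycol mixtures with a lower heat capacity, which raises the required flow.

```python
# Sketch: coolant flow needed to remove a heat load, from Q = m_dot * c_p * dT.
# Assumptions (not product specs): plain water, c_p = 4186 J/(kg*K),
# density = 1000 kg/m^3, caller-chosen supply/return temperature rise.

WATER_CP_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_M3 = 1000.0


def required_flow_lpm(heat_load_w: float, delta_t_k: float,
                      cp: float = WATER_CP_J_PER_KG_K,
                      density: float = WATER_DENSITY_KG_PER_M3) -> float:
    """Return the volumetric coolant flow in litres per minute."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_load_w / (cp * delta_t_k)   # kg/s of coolant
    volume_flow_m3_s = mass_flow_kg_s / density       # m^3/s of coolant
    return volume_flow_m3_s * 1000.0 * 60.0           # m^3/s -> L/min


if __name__ == "__main__":
    # One 100 kW rack with an assumed 10 K temperature rise.
    print(f"{required_flow_lpm(100_000, 10):.1f} L/min")
```

Under these assumptions, a single 100 kW rack needs roughly 143 L/min of water. Halving the allowed temperature rise doubles the required flow, which is why loop ΔT is a key design parameter.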

Why Liquid + Container?

Choose Your Scale

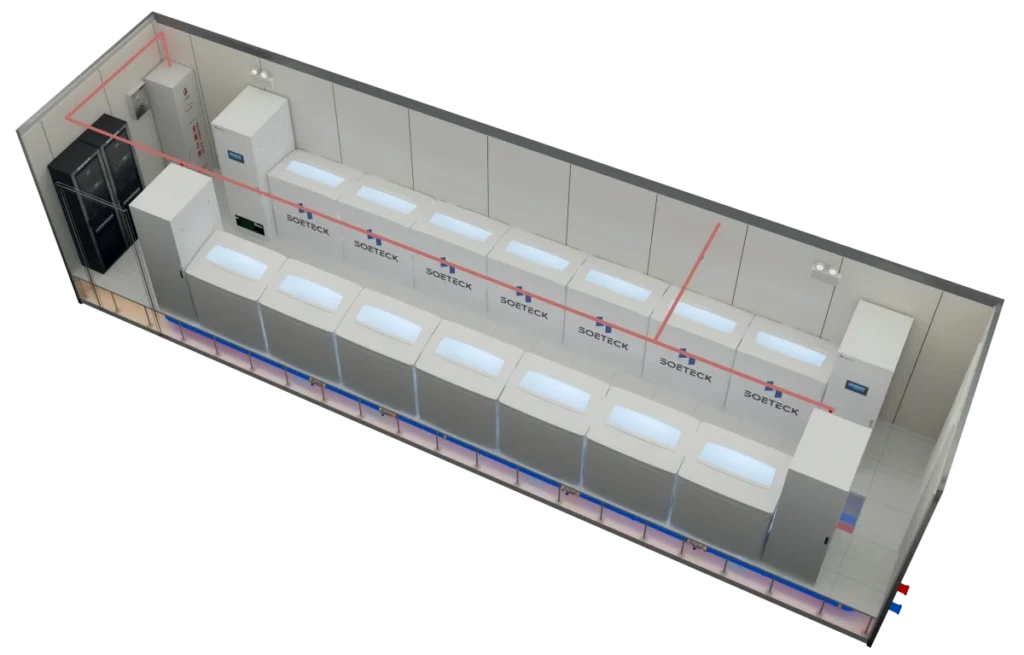

High-Density Cluster Module

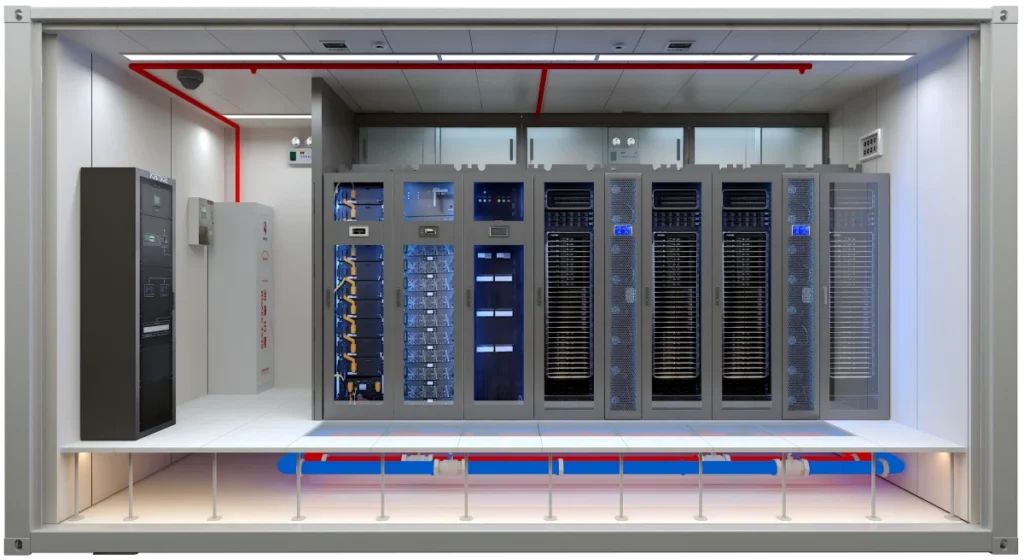

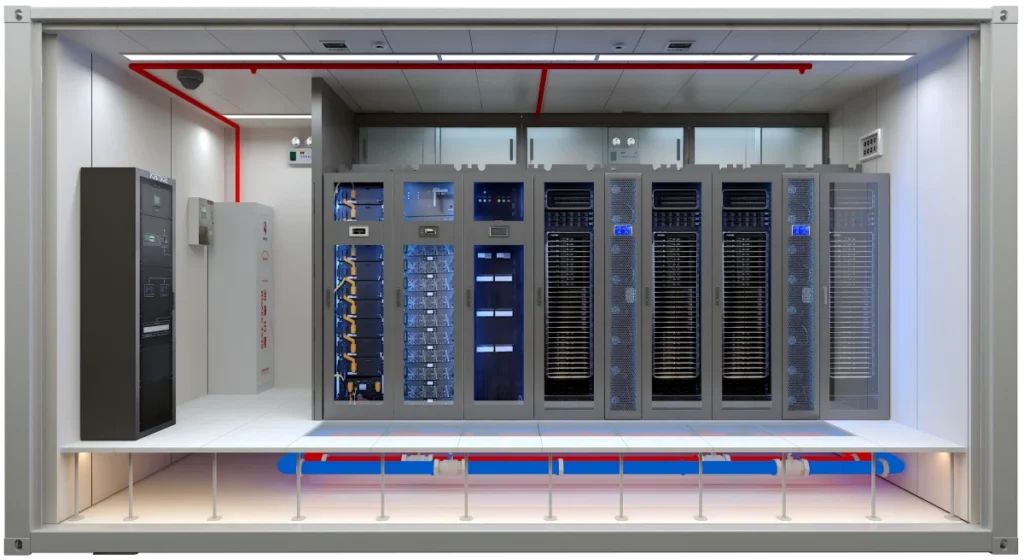

Integrated Standard Module

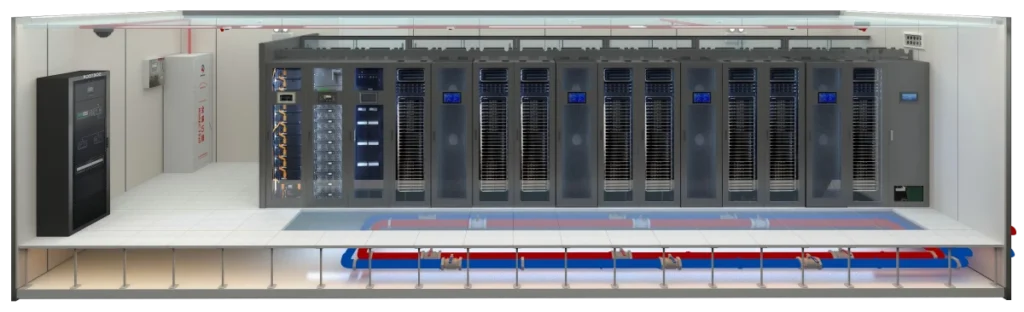

Edge Compact Module

Immersion Cooling Module

Engineering Core

Industrial-Grade ISO Shell

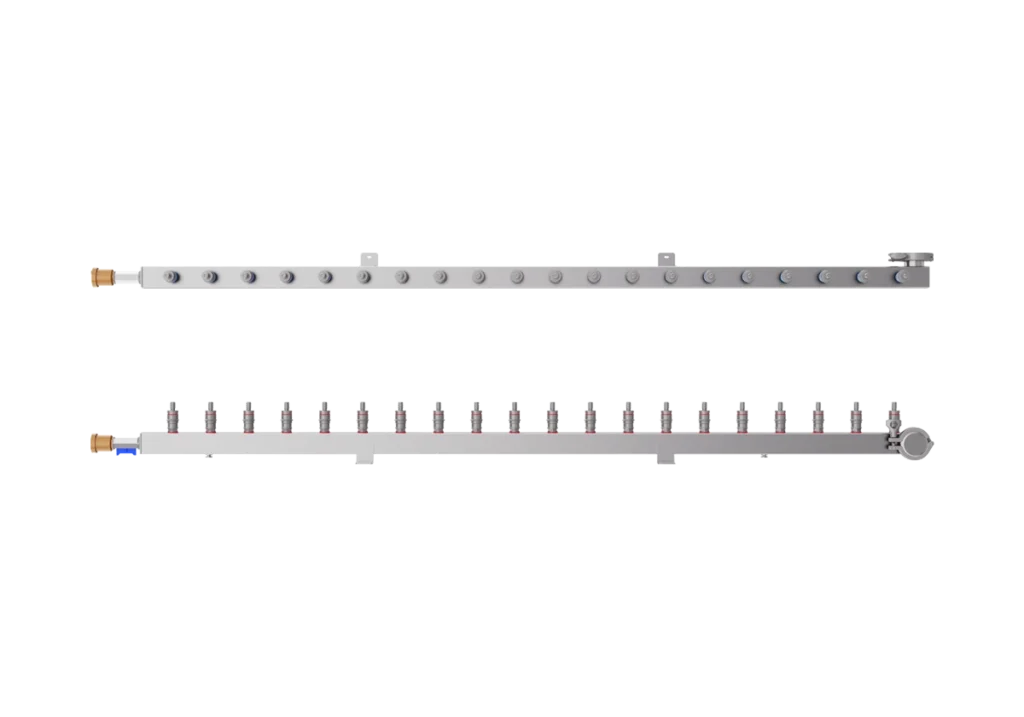

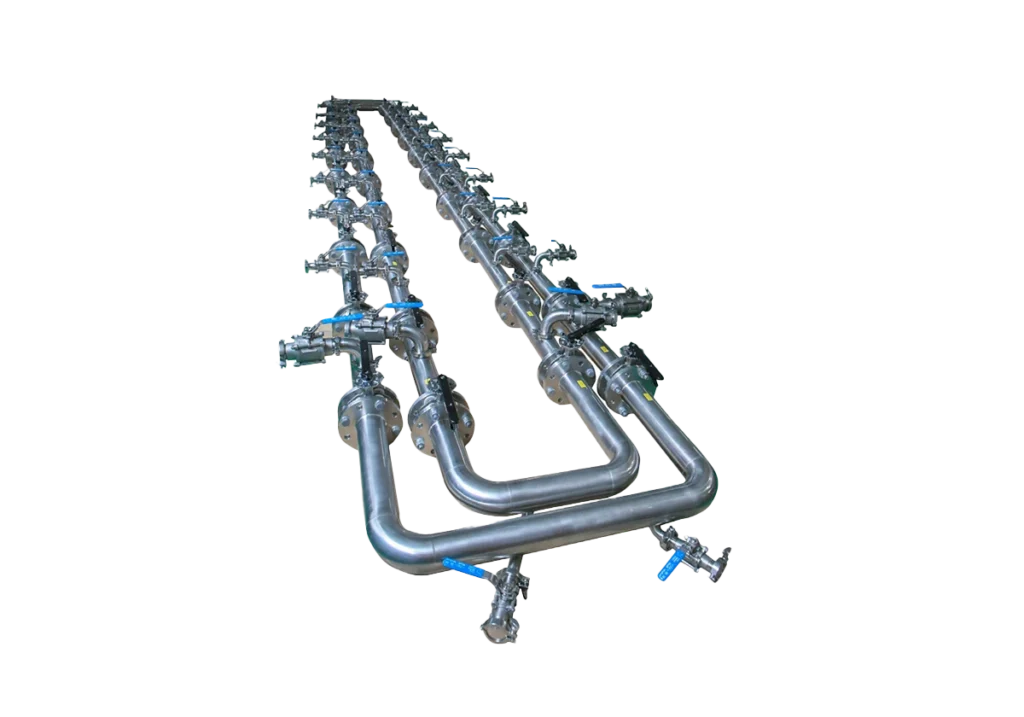

Precision Liquid Loop

Dry Cooler

Dry Coolers & Cooling Towers

Built for Scale.

Delivered Globally.

Expert Answers

What happens if a liquid leak occurs?

We employ a “Defense-in-Depth” strategy. First, our negative-pressure design ensures that if a micro-leak occurs, air is drawn into the loop rather than coolant spraying out. Second, rope and spot leak-detection sensors are deployed at every manifold joint and CDU. If moisture is detected, the system immediately isolates the affected loop and alerts operators, preventing damage to IT equipment.
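The isolate-and-alert behavior described above can be sketched as a simple polling loop. This is a hypothetical illustration, not AICoolit firmware: `LeakSensor`, `LoopController`, `close_valves`, and the sensor-to-loop mapping are names invented for this example, and valve actuation is reduced to a flag.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LeakSensor:
    """Hypothetical model of a rope or spot moisture sensor assigned to one loop."""
    sensor_id: str
    kind: str       # "rope" or "spot"
    loop_id: str    # loop whose valves close if this sensor reads wet
    wet: bool = False


@dataclass
class LoopController:
    """Hypothetical controller: isolates a loop and alerts once when any of its sensors reads wet."""
    sensors: List[LeakSensor]
    alert: Callable[[str], None]
    isolated: Dict[str, bool] = field(default_factory=dict)

    def close_valves(self, loop_id: str) -> None:
        # Stand-in for actuating the loop's isolation valves.
        self.isolated[loop_id] = True

    def poll(self) -> List[str]:
        """Scan all sensors once; return the loops isolated during this scan."""
        newly_isolated: List[str] = []
        for sensor in self.sensors:
            if sensor.wet and not self.isolated.get(sensor.loop_id, False):
                self.close_valves(sensor.loop_id)
                self.alert(f"Leak at {sensor.kind} sensor {sensor.sensor_id}: "
                           f"loop {sensor.loop_id} isolated")
                newly_isolated.append(sensor.loop_id)
        return newly_isolated


if __name__ == "__main__":
    alerts: List[str] = []
    sensors = [
        LeakSensor("MANIFOLD-J3", "spot", "loop-A"),
        LeakSensor("CDU-1", "rope", "loop-B"),
    ]
    controller = LoopController(sensors, alert=alerts.append)
    sensors[0].wet = True
    print(controller.poll())  # ['loop-A']
    print(alerts)
```

Tracking which loops are already isolated keeps a second wet sensor on the same loop from raising a duplicate alert, while loops without a detected leak stay in service.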

Do I need specialized staff to maintain the liquid loop?

No. The AICoolit system is designed to be “set and forget”. Routine maintenance is minimal, consisting primarily of annual checks of fluid levels and filters. Our CDUs (coolant distribution units) include self-diagnostic capabilities. For server maintenance, our non-spill UQD (universal quick disconnect) connectors let IT staff hot-swap blades as easily as in air-cooled racks, with no plumbing skills required.

Is it compatible with NVIDIA H100/Blackwell GPUs?

Absolutely. Our liquid cooling architecture is engineered specifically for high-TDP chips drawing 1,000 W or more per socket. We support standard OCP cold plates that fit NVIDIA, AMD, and Intel accelerators. Whether you are running HGX H100 clusters or Blackwell-based systems, our 100 kW-per-rack density provides ample thermal headroom.
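Whether a given build fits within the 100 kW rack figure comes down to simple addition. The sketch below assumes a hypothetical rack of identical servers; the server count, accelerators per server, per-accelerator TDP, and non-accelerator load are placeholder inputs, not measured values for any NVIDIA, AMD, or Intel system, and the function names are invented for illustration.

```python
RACK_BUDGET_W = 100_000  # 100 kW per rack, the density quoted above


def rack_headroom_w(servers: int, accelerators_per_server: int,
                    accelerator_tdp_w: float, other_load_per_server_w: float,
                    budget_w: float = RACK_BUDGET_W) -> float:
    """Remaining rack budget in watts; a negative result means the rack is over budget."""
    per_server_w = accelerators_per_server * accelerator_tdp_w + other_load_per_server_w
    return budget_w - servers * per_server_w


def max_servers(accelerators_per_server: int, accelerator_tdp_w: float,
                other_load_per_server_w: float, budget_w: float = RACK_BUDGET_W) -> int:
    """Largest whole number of identical servers that fits within the budget."""
    per_server_w = accelerators_per_server * accelerator_tdp_w + other_load_per_server_w
    if per_server_w <= 0:
        raise ValueError("per-server load must be positive")
    return int(budget_w // per_server_w)


if __name__ == "__main__":
    # Placeholder inputs: 8 servers, each with 8 x 1,000 W accelerators and 2 kW of other load.
    print(rack_headroom_w(8, 8, 1000, 2000))  # 20000
    print(max_servers(8, 1000, 2000))         # 10
```

With these placeholder inputs, eight servers draw 80 kW, leaving 20 kW of headroom, and at most ten such servers fit in one rack's budget.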

Can these units operate in extreme climates?

Yes. The container shell is IP65-rated and R-30-insulated, isolating the internal environment from outside conditions. For heat rejection, our adiabatic dry coolers maintain cooling efficiency at ambient temperatures up to 50°C (122°F). We have successful deployments in Middle Eastern deserts and tropical regions of Southeast Asia.

How fast can we deploy a 1MW cluster?

Traditional builds take 18 to 24 months. With AICoolit, we can deliver a factory-tested 1 MW module in 5 to 10 weeks. On site, installation requires only power and water hookups, and commissioning typically takes less than a week. This lets you start training your models months ahead of the competition.

Start Your AI Journey Today

Configure your liquid-cooled data center and get a preliminary engineering layout within 24 hours.